LeetCode Problem – Duplicate Integer: How to Spot Duplicates in an Array

Alright, let’s talk about duplicates. You’ve got an array of numbers, and your job is to figure out if any of those numbers make more than one appearance. Seems simple, right? Well, as straightforward as it may sound, this is one of those classic coding problems that gets right to the heart of efficient problem-solving.

Think of it as finding the “repeat offenders” in a room full of people—some might look innocent, but there’s always that one number lurking around more than once. Our mission? Identify the duplicate without making this process more painful than it needs to be.

Breaking It Down – The “Where Did My Socks Go?” Analogy

Imagine you’ve got a laundry basket full of socks. Now, one morning, you reach in to find your favorite pair, but then, to your horror, you realize you’ve got an extra left sock! Duplicates. Somewhere in that pile, a pair of socks snuck in twice. Now, your task is simple: find that extra sock (the duplicate) before you leave the house wearing two different ones.

The problem is exactly that: we need to spot if any element in the array appears more than once.

The Naive Approach – “Why Not Just Compare Everything?”

Let’s start with brute force. We could just compare each element to every other element. It’s not efficient, but it’s a straightforward way to check for duplicates.

Code Example:

public bool bCheckForDuplicateValues(int[] aiNums)

{

// Loop through each number in the array

for (int iIndexOuter = 0; iIndexOuter < aiNums.Length; iIndexOuter++)

{

// Compare current number with every number after it

for (int iIndexInner = iIndexOuter + 1; iIndexInner < aiNums.Length; iIndexInner++)

{

// If two numbers match, return true

if (aiNums[iIndexOuter] == aiNums[iIndexInner])

{

// Duplicate found!

return true;

}

}

}

// No duplicates found

return false;

}Why This Is a Bad Idea:

- Time Complexity: O(n2). Nested loops mean a lot of unnecessary work.

- Space Complexity: O(1). At least we’re not hogging any extra memory.

This approach works but scales poorly. It’s like trying to find a duplicate sock by checking every pair in your drawer. You’ll get the job done eventually, but there are better ways.

The HashSet Approach – Keeping Track Efficiently

Let’s get smart about this. Instead of comparing everything, what if we keep a record of every number we’ve already seen? That’s where a HashSet comes in. It lets us quickly check whether we’ve encountered a number before.

Code Example:

public bool bCheckForDuplicateValuesWithHashSet(int[] aiNums)

{

HashSet<int> isetSeenValues = new HashSet<int>();

foreach (int iNumValue in aiNums)

{

// If the value is already in the set, return true

if (isetSeenValues.Contains(iNumValue))

{

// Duplicate found!

return true;

}

// Add the current value to the set

isetSeenValues.Add(iNumValue);

}

// No duplicates found

return false;

}Why This Is Better:

- Time Complexity: O(n). We check each number once.

- Space Complexity: O(n). The HashSet uses extra space, but it’s more efficient overall.

This is a significant improvement over brute force. By using a set, we avoid unnecessary comparisons and get faster results.

The Sorted Array Approach – Optimizing Without Extra Memory

If you don’t want to use extra space, sorting the array first is another great solution. Once the array is sorted, duplicates will always be adjacent, and you can just check each element against its neighbor.

Code Example:

public bool bCheckForDuplicateValues(int[] aiNums)

{

// Sort the array first

Array.Sort(aiNums);

// Loop through the sorted array and check adjacent elements

for (int iIndex = 0; iIndex < aiNums.Length - 1; iIndex++)

{

// If two consecutive numbers are the same, return true

if (aiNums[iIndex] == aiNums[iIndex + 1])

{

// Duplicate found!

return true;

}

}

// No duplicates found

return false;

}Pros and Cons:

- Time Complexity: O(n log n). Sorting takes some time, but checking adjacent elements is fast.

- Space Complexity: O(1). No additional memory is used after sorting.

Edge Cases – Watch Out for These Traps!

- Empty Array: If the array is empty, there are no duplicates. Simple as that.

- Single Element Array: One number can’t be a duplicate of itself.

- All Elements Are the Same: If the array consists entirely of the same number, it should immediately return true.

Comparison Table:

| Approach | Time Complexity | Space Complexity | Pros | Cons |

|---|---|---|---|---|

| Brute Force (Nested Loops) | O(n2) | O(1) | Simple, easy to understand | Slow for large arrays |

| HashSet Approach | O(n) | O(n) | Efficient for large arrays | Extra memory usage |

| Sorting Approach | O(n log n) | O(1) | Uses no extra memory after sort | Sorting takes some time |

Final Thoughts – Focus on Understanding

Whether you use brute force, a HashSet, or sorting, what’s important is understanding the logic behind each approach. You don’t need to memorize solutions—you need to know why they work. That way, when you face similar problems, you can confidently tackle them.

Your Turn – Test It Out in Visual Studio!

Ready to try it yourself? Here’s how to set up and test the solution in Visual Studio (not VS Code).

Steps to Run Your Code in Visual Studio:

- Create a New Console App:

- Open Visual Studio.

- Click on File > New > Project.

- Select Console App (.NET Core) and click Next.

- Name your project and click Create.

- Write the Code:

- In the Program.cs file, paste the solution code.

- You can modify the Main method to call your function and test different arrays.

Example:

class Program

{

public static bool bCheckForDuplicateValuesWithHashSet(int[] aiNums)

{

HashSet<int> isetSeenValues = new HashSet<int>();

foreach (int iNumValue in aiNums)

{

// If the value is already in the set, return true

if (isetSeenValues.Contains(iNumValue))

{

// Duplicate found!

return true;

}

// Add the current value to the set

isetSeenValues.Add(iNumValue);

}

// No duplicates found

return false;

}

static void Main(string[] args)

{

// Example array

int[] arrTest = { 1, 2, 3, 4, 5, 1 };

bool bHasDuplicates = bCheckForDuplicateValuesWithHashSet(arrTest);

Console.WriteLine("Contains Duplicates: " + bHasDuplicates);

}

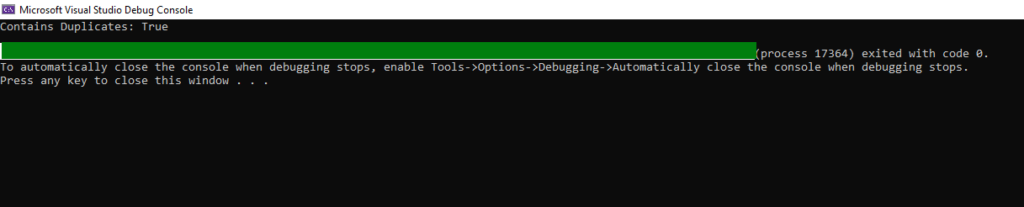

}- Run the Program:

- Press F5 or click the Start button to run your program.

- Check the output in the console window to see if duplicates were found.

- Experiment:

- Modify the test array with different numbers and edge cases (empty arrays, arrays with all the same numbers, etc.) to see how your solution performs.

Bonus Section – Benchmarking Different Approaches

Want to see how each approach performs on large arrays? Try timing each one with different array sizes using Stopwatch in C# to measure the execution time.

Here’s a quick way to get started:

Stopwatch stopwatch = new Stopwatch();

stopwatch.Start();

// Call your function here

stopwatch.Stop();

Console.WriteLine("Elapsed Time: " + stopwatch.ElapsedMilliseconds + " ms");Benchmarking can give you insight into how well each approach scales with larger datasets, and can be a fun way to dig deeper into performance tuning.

You can integrate this into your code like so:

using System.Diagnostics;

class Program

{

public static bool bCheckForDuplicateValuesWithHashSet(int[] aiNums)

{

HashSet<int> isetSeenValues = new HashSet<int>();

foreach (int iNumValue in aiNums)

{

// If the value is already in the set, return true

if (isetSeenValues.Contains(iNumValue))

{

// Duplicate found!

return true;

}

// Add the current value to the set

isetSeenValues.Add(iNumValue);

}

// No duplicates found

return false;

}

static void Main(string[] args)

{

// Example array

int[] arrTest = { 1, 2, 3, 4, 5, 1,10, 23, 1, 43, 100, 7, 10, 6, 7, 32 };

// Create a stopwatch instance

Stopwatch stopwatch = new Stopwatch();

// Start the stopwatch

stopwatch.Start();

bool bHasDuplicates = bCheckForDuplicateValuesWithHashSet(arrTest);

// Stop the stopwatch

stopwatch.Stop();

// Output the result - whether a duplicate exists

Console.WriteLine("Contains Duplicates: " + bHasDuplicates);

// Output the elapsed time in milliseconds

Console.WriteLine("Elapsed Time: " + stopwatch.ElapsedTicks + " Ticks");

}

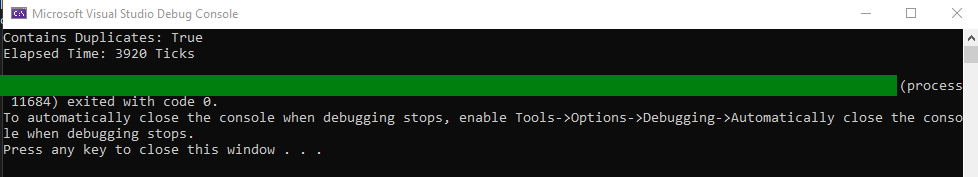

}Now, we’re recording the time elapsed in ticks. Here’s a screenshot of the results:

As you can see, the time elapsed is 3920 ticks, less than 1 millisecond. Fun fact: 1 millisecond is equal to 10,000 ticks (in Microsoft’s world). Don’t believe me? Microsoft backs me up right here.

If you want to bump this up to milliseconds, just increase the array size. Here’s a hint, but I’ll leave the actual integration to you:

// Example of increasing the size of the array

int[] aiTest = new int[100000];

Random rand = new Random();

// Fill the array with random numbers

for (int i = 0; i < aiTest.Length; i++)

{

// Random numbers between 0 and 50,000

aiTest[i] = rand.Next(0, 50000);

}

And if you’re curious about Random.Next, Microsoft’s got you covered here.

Now that you’ve seen how to approach this problem from different angles, it’s time to put your own spin on it. Give it a try, break things, and—most importantly—learn something new. Happy coding!